
I am currently in the MSCS program at UIUC, advised by Prof. Jiaxuan You. I am also a researcher in the MIT Brain and Cognitive Sciences department, working with Prof. Evelina Fedorenko and Dr. Andrea de Varda in the EvLab. I received my B.A. in Mathematics and Computer Science from Carleton College, a leading liberal arts college in the US. As an undergraduate, I was fortunate to work with Prof. Anima Anandkumar and Dr. Rafał Kocielnik in the Anima AI+Science Lab at Caltech. I also previously interned at NVIDIA.

韩芃睿 / Email / Google Scholar / GitHub / LinkedIn / Twitter


[Jan 2026] Our paper Large Language Model Reasoning Failures has been accepted to TMLR with a Survey Certificate.


My research aims to advance scientific understanding of AI (especially neural models like LLMs), and more broadly, the general principles of intelligence and intelligent behavior. I approach this goal across three interconnected levels:


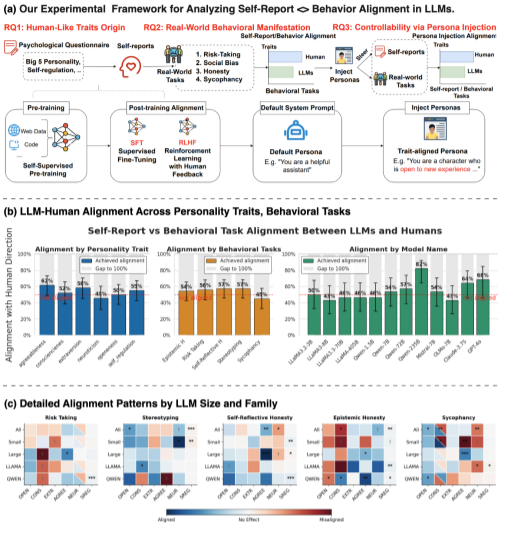

Pengrui Han*, Rafal D. Kocielnik*, Peiyang Song, Ramit Debnath, Dean Mobbs, Anima Anandkumar, and R. Michael Alvarez (* Equal Contribution)

NeurIPS LAW Workshop: Bridging Language, Agent, and World Models, 2025, Oral Presentation + Best Paper Honorable Mention

NeurIPS Workshop on LLM Persona Modeling (PersonaNLP), 2025, Oral Presentation

arXiv / project / code

LLMs say they have personalities, but they don’t act like it. Alignment today shapes language, not behavior. This linguistic–behavioral dissociation cautions against equating coherent self-reports with cognitive depth.


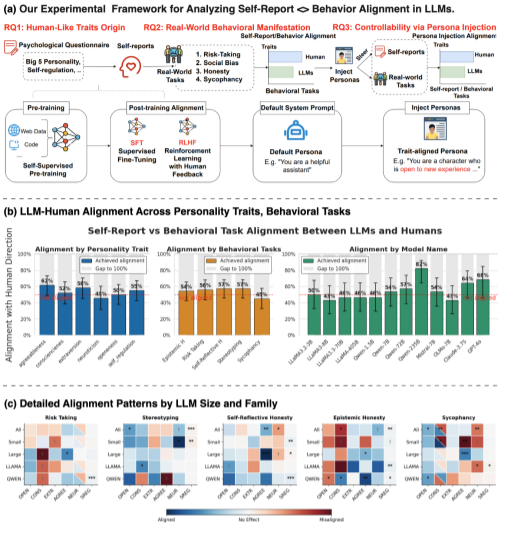

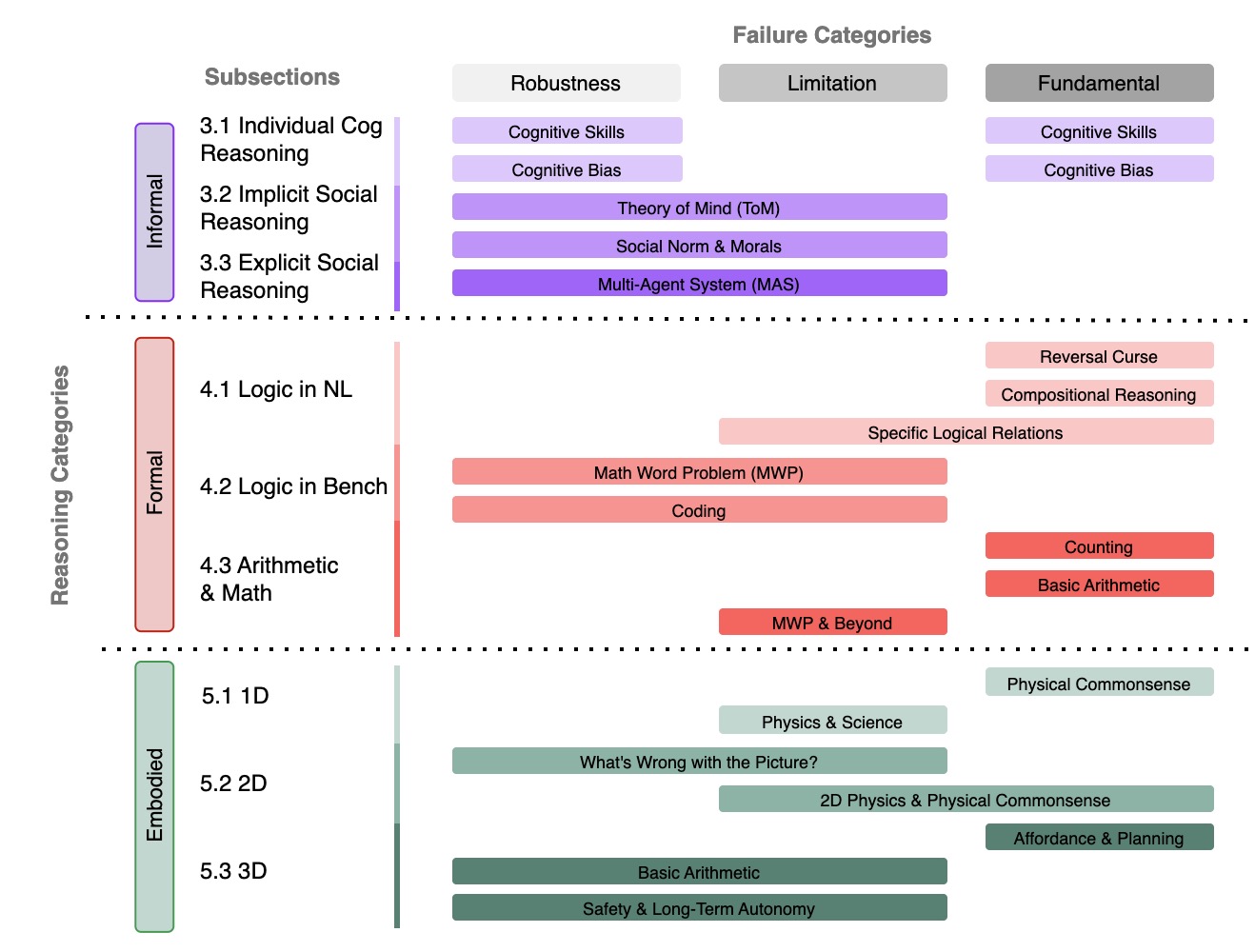

Peiyang Song*, Pengrui Han*, and Noah Goodman (* Equal Contribution)

Transactions on Machine Learning Research (TMLR), 2026, Survey Certificate

code / proceeding

We present the first comprehensive survey dedicated to reasoning failures in LLMs. By unifying fragmented research efforts, the survey offers a structured view of systemic weaknesses in LLM reasoning and helps guide future research toward stronger, more reliable reasoning capabilities.


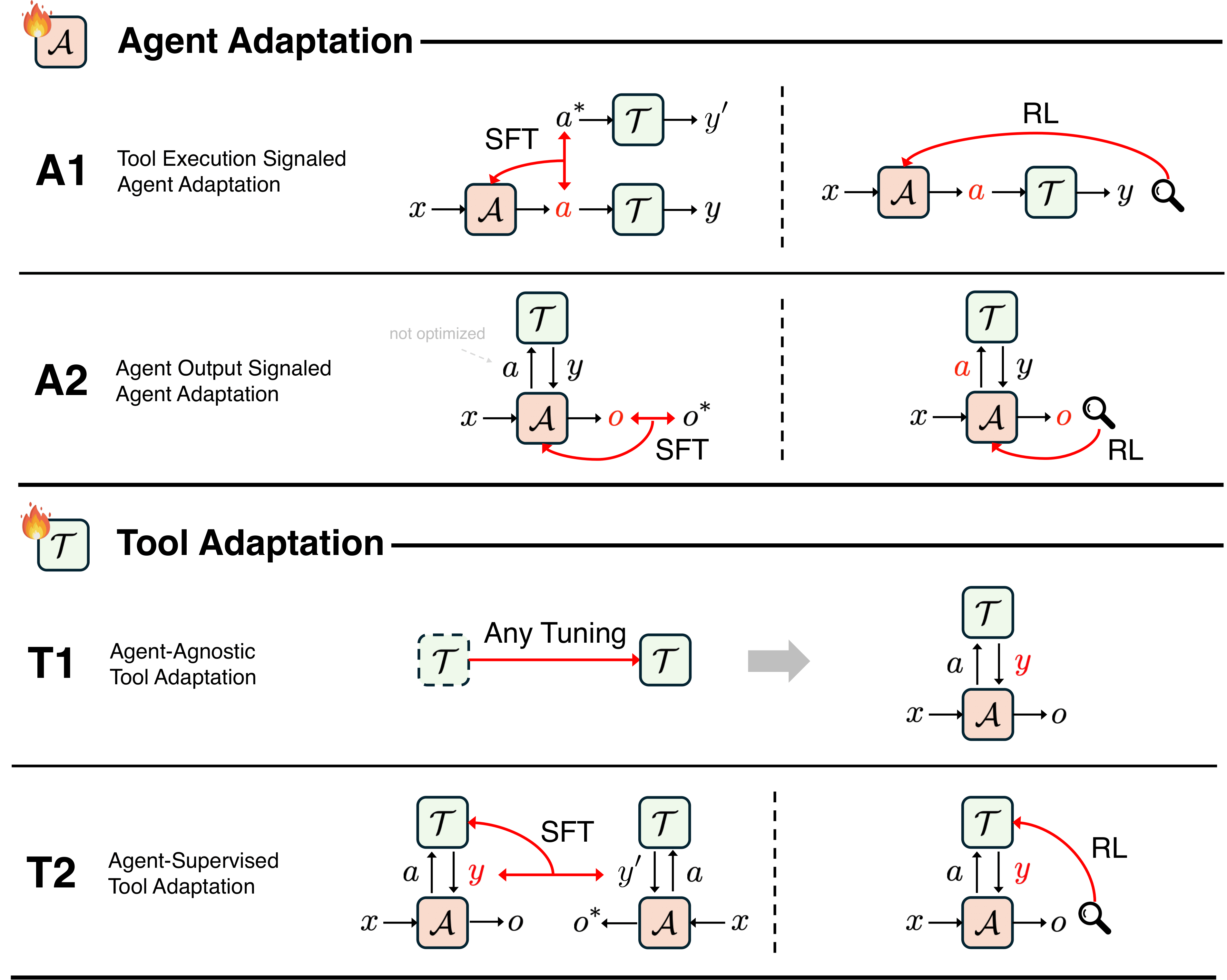

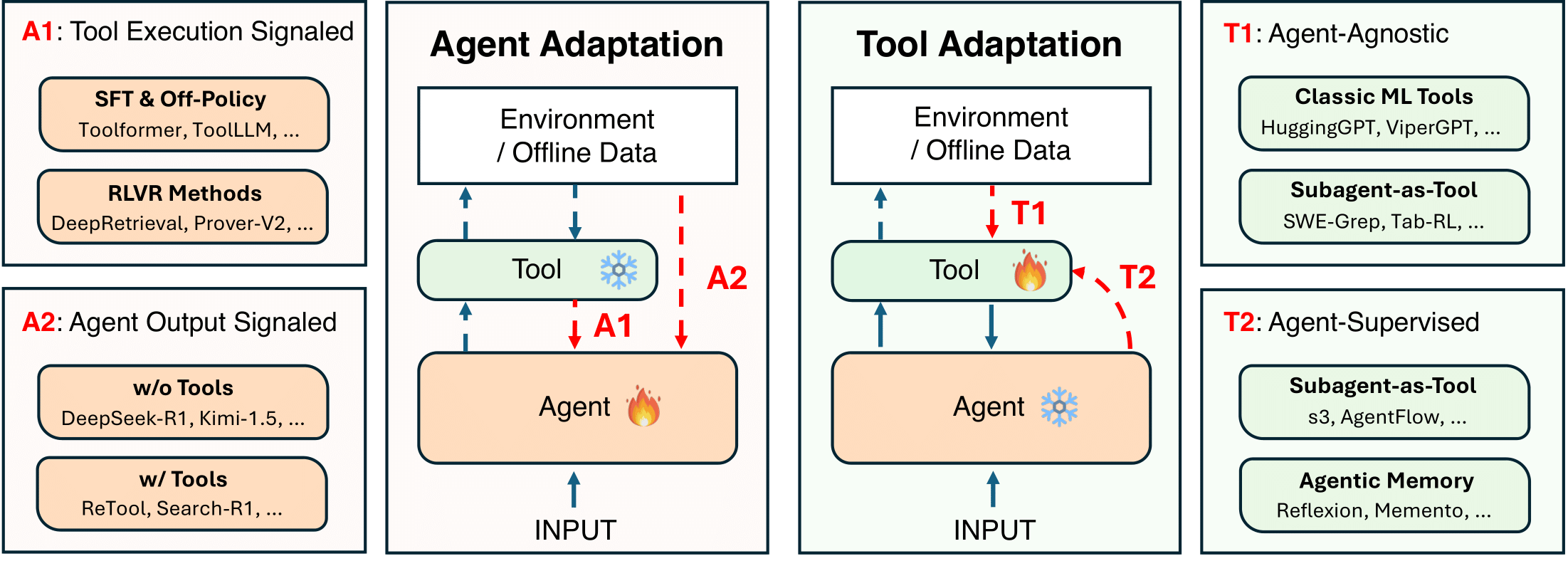

Pengcheng Jiang*, Jiacheng Lin*, Zhiyi Shi*, Zifeng Wang, Luxi He, Yichen Wu, Ming Zhong, Peiyang Song, Qizheng Zhang, Heng Wang, Xueqiang Xu, Hanwen Xu, Pengrui Han, Dylan Zhang, Jiashuo Sun, Chaoqi Yang, Kun Qian, Tian Wang, Changran Hu, Manling Li, Quanzheng Li, Hao Peng, Sheng Wang, Jingbo Shang, Chao Zhang, Jiaxuan You, Liyuan Liu, Pan Lu, Yu Zhang, Heng Ji, Yejin Choi, Dawn Song, Jimeng Sun, Jiawei Han (* Equal Contribution)

Preprint, 2025

arXiv

Cutting-edge agentic AI systems are built on foundation models that can be adapted to plan, reason, and interact with external tools to perform increasingly complex and specialized tasks. As these systems grow in capability and scope, adaptation becomes a central mechanism for improving performance, reliability, and generalization. In this paper, we unify the rapidly expanding research landscape into a systematic framework that spans both agent adaptations and tool adaptations.


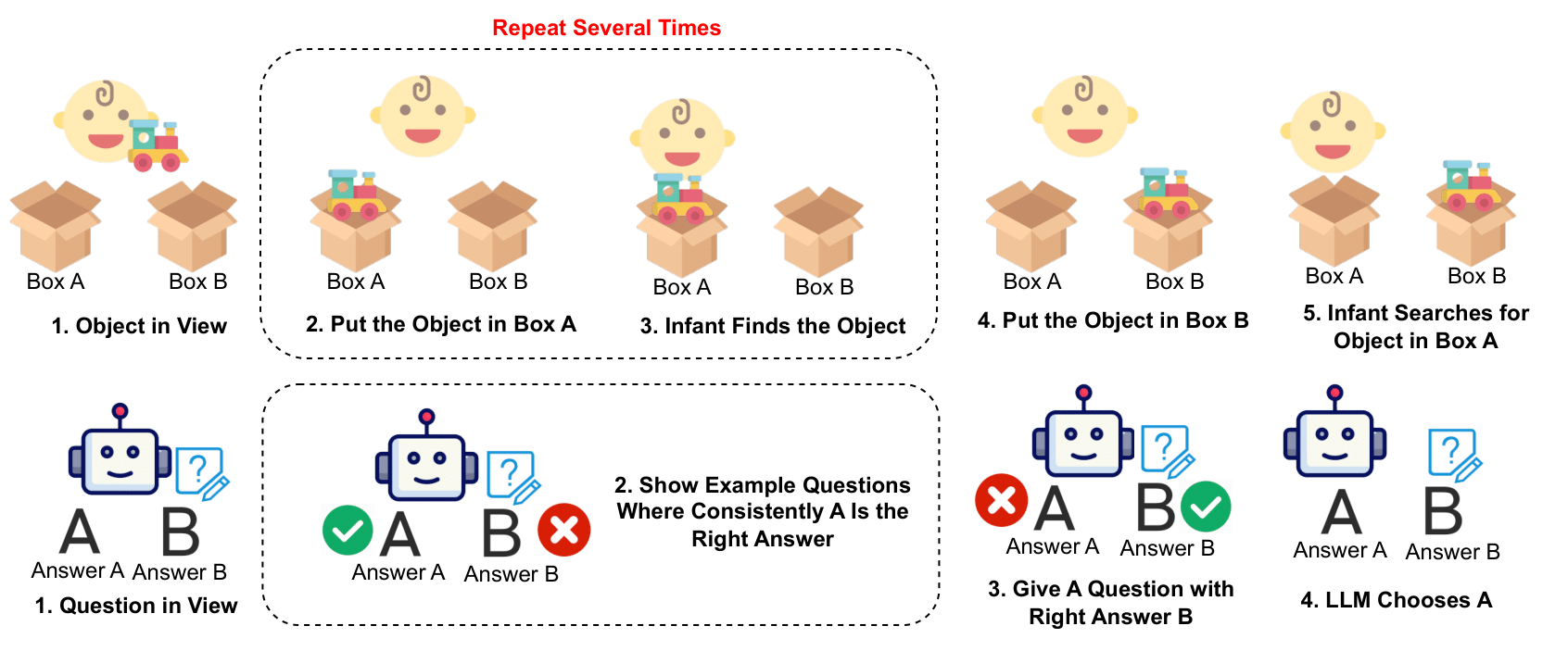

Pengrui Han*, Peiyang Song*, Haofei Yu, and Jiaxuan You (* Equal Contribution)

Findings of Empirical Methods in Natural Language Processing (EMNLP), 2024

code

Motivated by the cognitive phenomenon of A-not-B errors, we present the first systematic evaluation of the surprisingly vulnerable inhibitory control abilities of LLMs. We show that this weakness undermines the trustworthiness of LLM reasoning across diverse domains, and we introduce several mitigations.


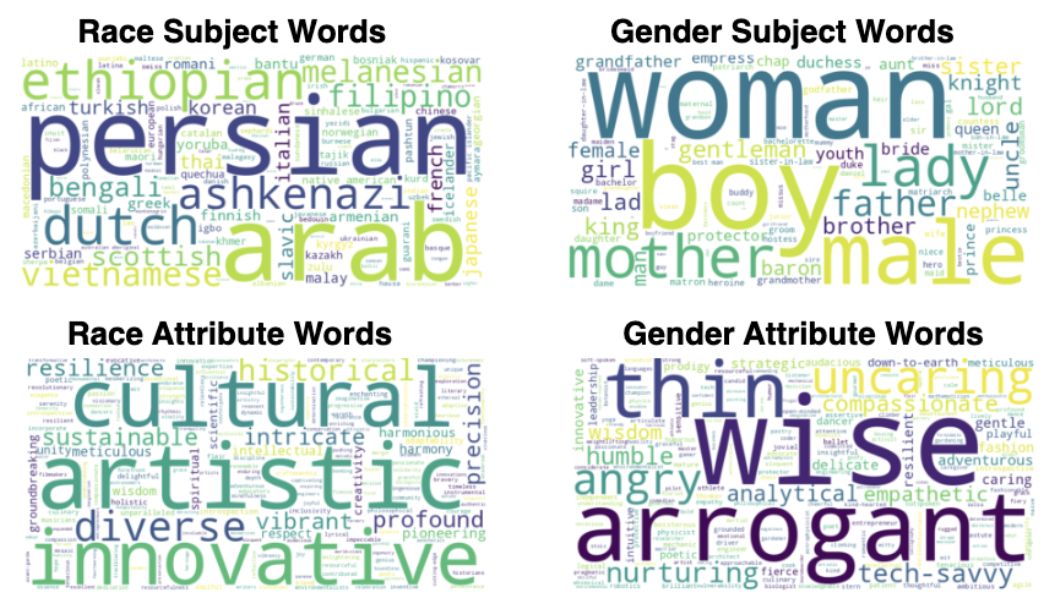

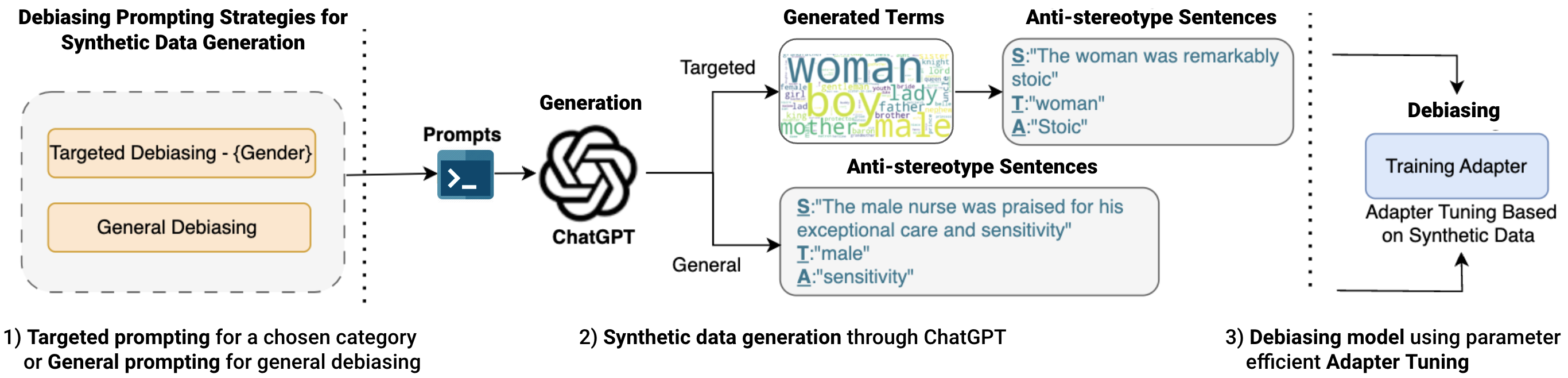

Pengrui Han*, Rafal Kocielnik*, Adhithya Saravanan, Roy Jiang, Or Sharir, and Anima Anandkumar (* Equal Contribution)

Conference on Language Modeling (COLM), 2024

code

We propose a lightweight and efficient pipeline that enables both domain experts and non-experts to quickly generate synthetic debiasing data, which can then be used with parameter-efficient fine-tuning to mitigate specific or general bias in their models.


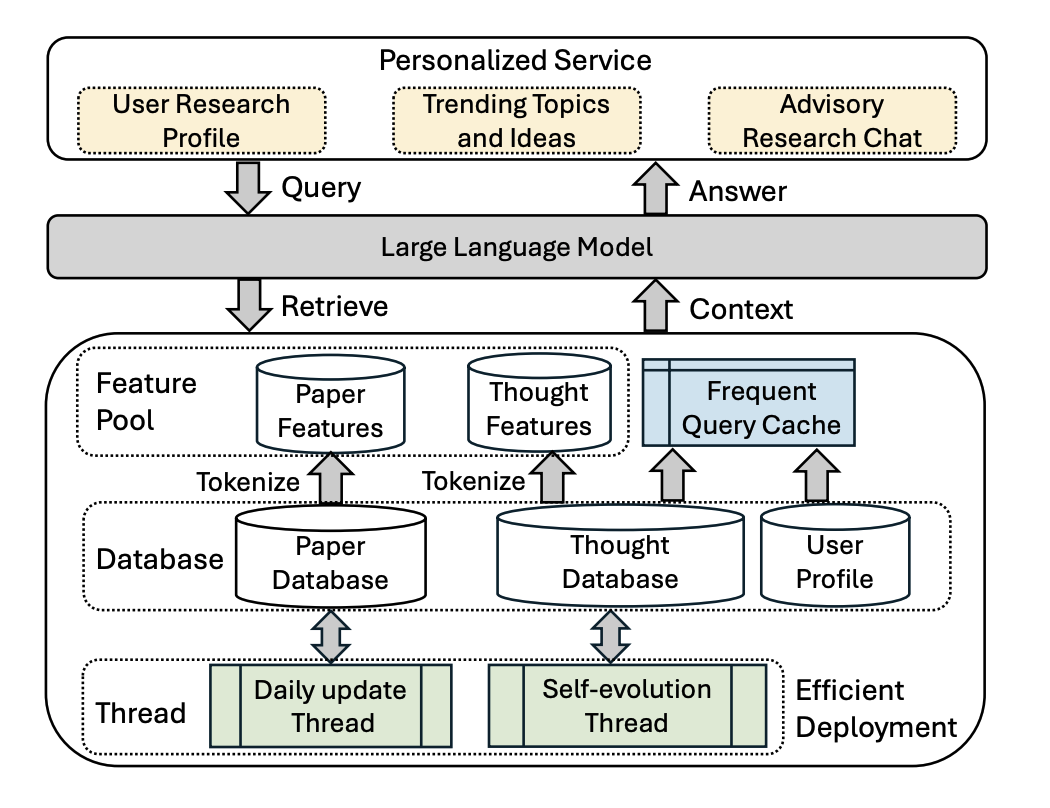

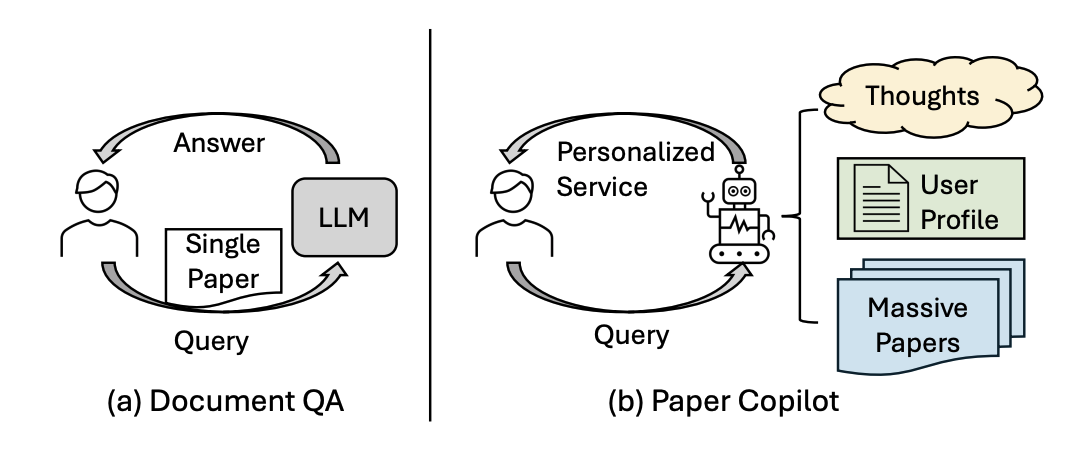

Guanyu Lin*, Tao Feng*, Pengrui Han*, Ge Liu, Jiaxuan You (* Equal Contribution)

System Demonstration Track of Empirical Methods in Natural Language Processing (EMNLP), 2024

Hugging Face Live Demo: Link
